Powering next-generation intelligence from ground to cloud.

Prompt Workbench, Inference Endpoints, Fine-Tuning

Slurm, Nscale Kubernetes Services, Instances

Infrastructure Services

Control Center, Observability, Radar API

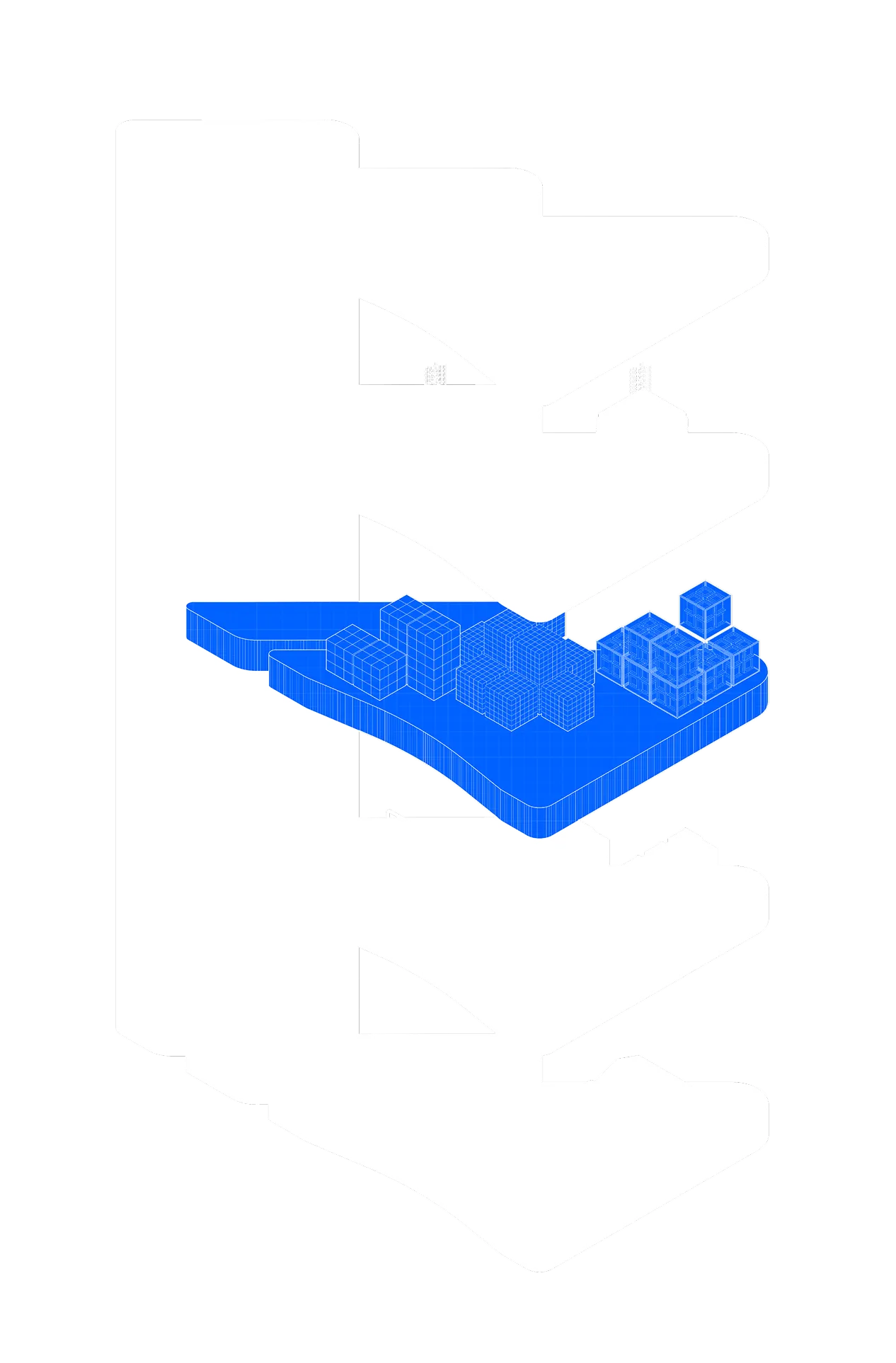

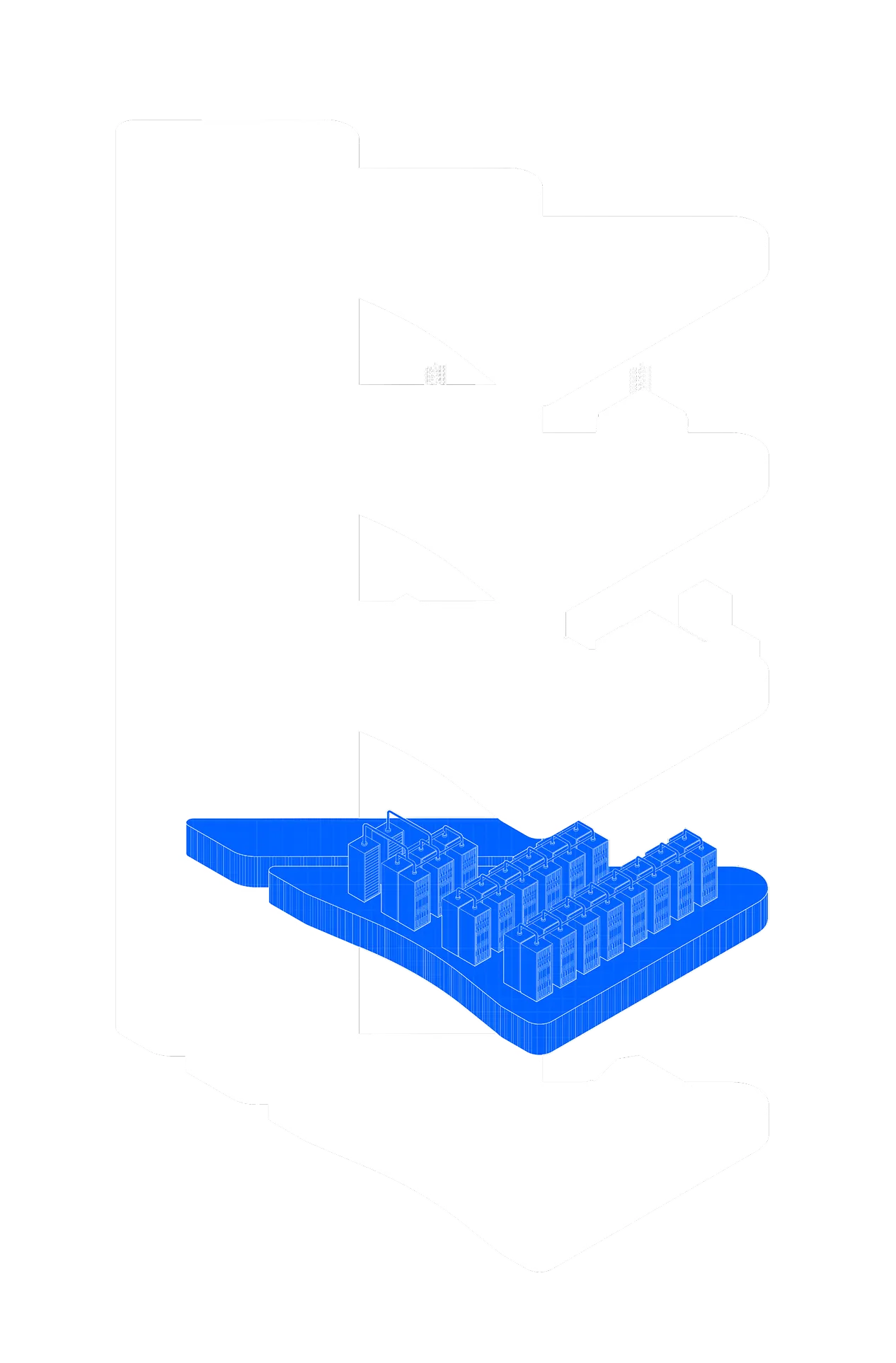

Modular, Sovereign, Sustainably-powered

Inference endpoints, fine-tuning workflows, and a unified workbench for prompt engineering.

Virtual Machines or bare metal nodes. Deploy using Nscale Kubernetes Service or Slurm clusters.

High-throughput, low-latency backbone engineered for AI and High Performance Computing workloads.

Automated system-wide configuration control, health monitoring, and infrastructure lifecycle management.

Advanced sovereign and sustainable data centers anchor the stack with future-proof, modular facilities.


Faster iteration, lower cost, and reliable scaling through unified workflows, from prompt engineering to production-grade inference. Powered by the most efficient AI infrastructure for advanced AI systems.

Inference endpoints, fine-tuning workflows, and a unified workbench for prompt engineering.

Launch inference in minutes while eliminating cluster management overhead with an autoscaling inference layer backed by Nscale-managed GPU clusters.

Customize frontier models to your domain quickly and efficiently, reduce reliance on generic models, and accelerate the path from POC to production with serverless, API-driven fine-tuning pipelines.

Experiment and optimize prompts quickly without burning GPU hours, accelerating time-to-prototype through a browser-based workbench with versioned prompts and real-time model feedback.

Instances are available as virtual machines (VMs) or bare metal nodes, with the option to orchestrate deployments using Nscale Kubernetes Service (NKS) or Slurm clusters.

Make queue times predictable so teams can manage mixed workloads with confidence, delivered by NVIDIA’s Slinky, an HPC-grade batch scheduling service that runs Slurm on Kubernetes and is tuned for large-scale GPU workloads.

Ensure production readiness by provisioning isolated Kubernetes environments in under two minutes for rapid testing, then run production-ready training that reduces operational risk through GPU-aware scheduling, seamless autoscaling, and enterprise-grade security.

Choose bare-metal nodes for maximum performance on intensive workloads, or virtual machines for flexibility and convenience, delivered by Nscale-managed lifecycle controllers, prebuilt AI images, and optional VPC isolation.

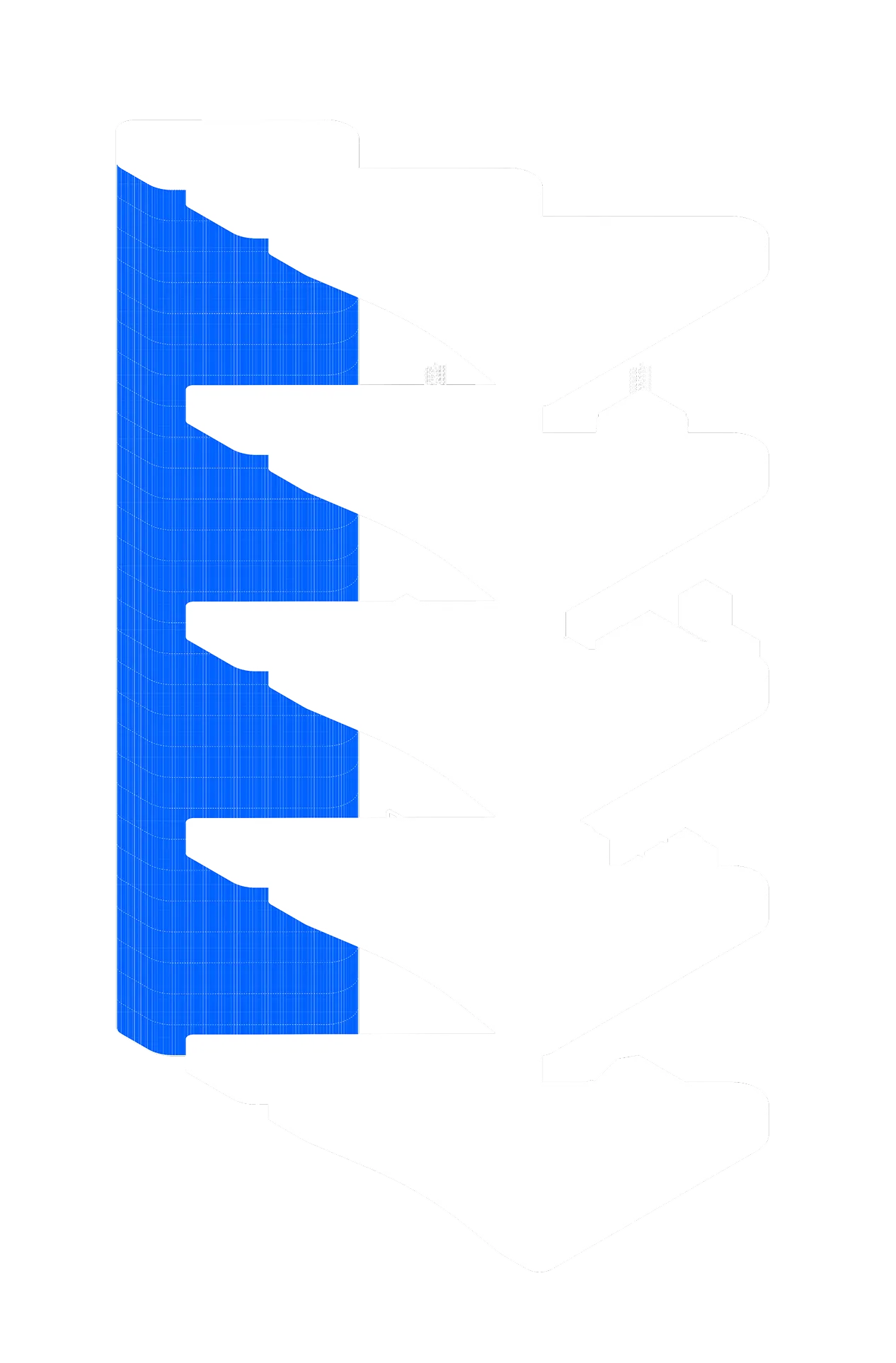

High-throughput, low-latency backbone engineered for AI and High Performance Computing (HPC) workloads.

Maximise GPU efficiency and utilisation to lower cost per run and accelerate experiments, delivered as raw bare-metal nodes on the latest generation of NVIDIA GPUs optimised for large-scale training, fine-tuning and inference.

Prevent slowdowns that delay product launches by ensuring predictable throughput for training and inference at scale, delivered by parallel, AI-optimised storage tiers with GPU-tuned distributed file systems and a low-latency design.

Scale training from dozens to thousands of GPUs with no network bottlenecks, thanks to RDMA/InfiniBand/NVLink fabrics, multi-rack topology and low-latency interconnects.

Automated system-wide configuration control, health monitoring, and infrastructure lifecycle management for maximum GPU utilization at scale.

Cut operational overhead and maximize GPU efficiency and utilization with a unified lifecycle manager that automates provisioning, scaling and patching, tracks node health and triggers remediation workflows.

Gain end-to-end visibility into workloads to ensure predictable performance, cost accountability, and regulatory compliance, powered by telemetry across compute, storage, and networking with built-in dashboards, alerts, and integrated reporting.

Unlock real-time GPU resource governance and repair visibility for confident capacity planning. Radar API exposes availability, repair metrics, resource stats, and maintenance notices through one unified API.

Our global footprint of advanced sovereign and sustainable data centers anchors the stack with future-proof, modular facilities.

Predictable capacity provided by modular, multi-megawatt data centers with sovereign controls.