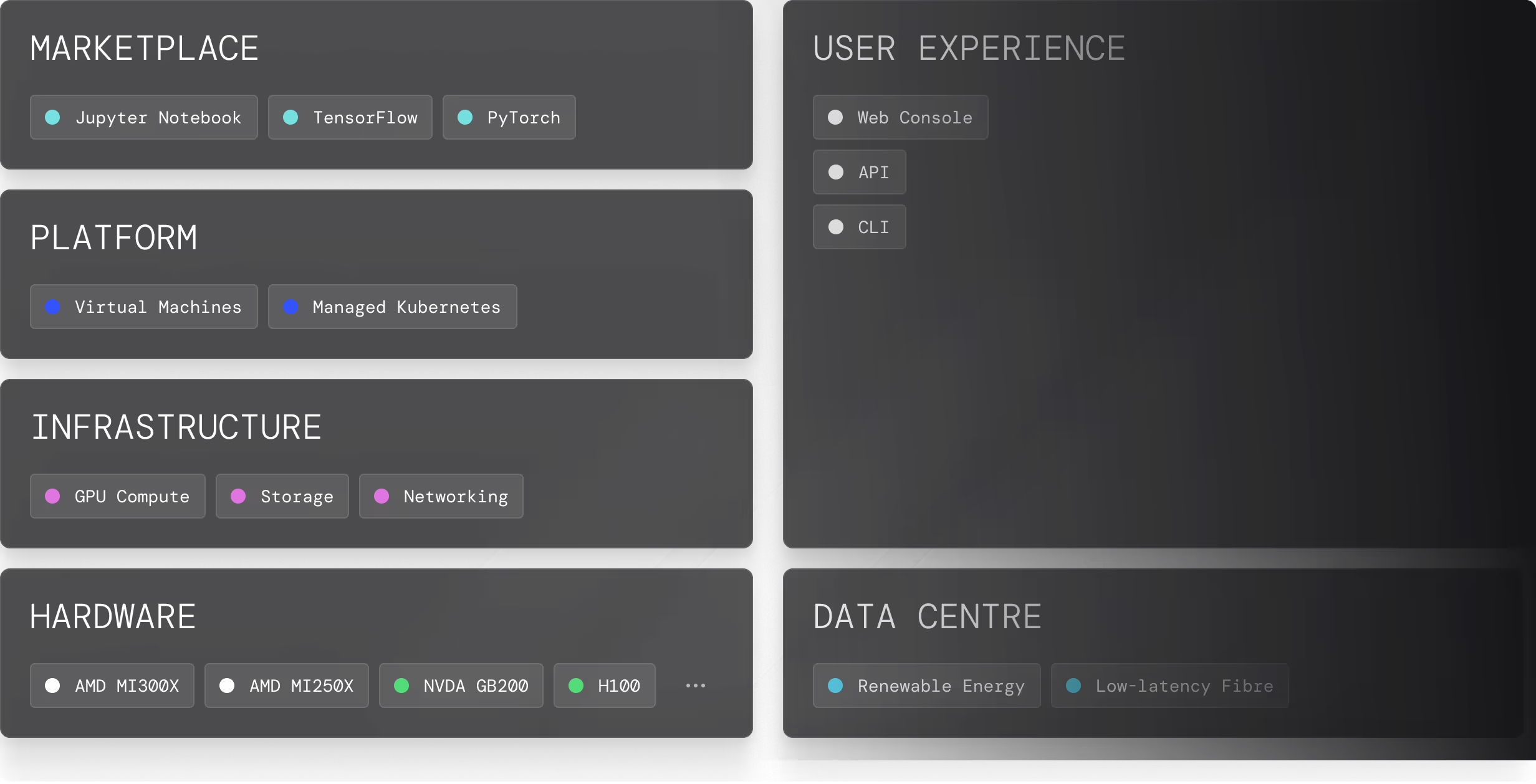

Experience lightning-fast inference with Nscale Cloud's seamless integrations with the latest AI frameworks, including TensorFlow Serving, PyTorch, and ONNX Runtime.

Products

AI Services

Develop, train, tune, and deploy AI using our on-demand services.

Serverless Inference

API endpoints for instant and scalable AI inference.

Fine-tuning

On-demand, serverless fine-tuning.

AI Private Cloud

Reserved large-scale GPU clusters purpose-built for AI.

Training Clusters

Easy-to-deploy GPU clusters that use the Slurm scheduler.

Inference Clusters

Autoscaling dedicated inference clusters for speed and scalability.

Bare Metal Clusters

Scalable, high-performance bare metal GPU clusters engineered for AI.

AI Factories

Discover the data centres powering the future of innovation.

Sovereign cloud

Hyperscaler performance, with sovereign governance and control.

Glomfjord

Powered by 100% renewable energy and located in the Arctic Circle.

Narvik

Stargate Norway, an Nscale–Aker joint venture data centre in the Arctic Circle.
