Looking for low-cost open-source AI models? You can integrate the Nscale Inference API into Cursor IDE and use Nscale-hosted models directly in your editor. The best part? Nscale’s Inference API uses an OpenAI-compatible format, which makes it easy to plug into tools like Cursor without extra complexity.

Cursor IDE integrates AI assistance directly into your coding workflow, enabling fast code generation, intelligent debugging, and context-aware suggestions.

In this step-by-step guide, we will walk you through how to set up and start using Nscale-hosted models inside Cursor.

Prerequisites:

To follow this guide you will need:

- Cursor installed on your device

- A Cursor account

- An account for Nscale serverless

- An Nscale API key

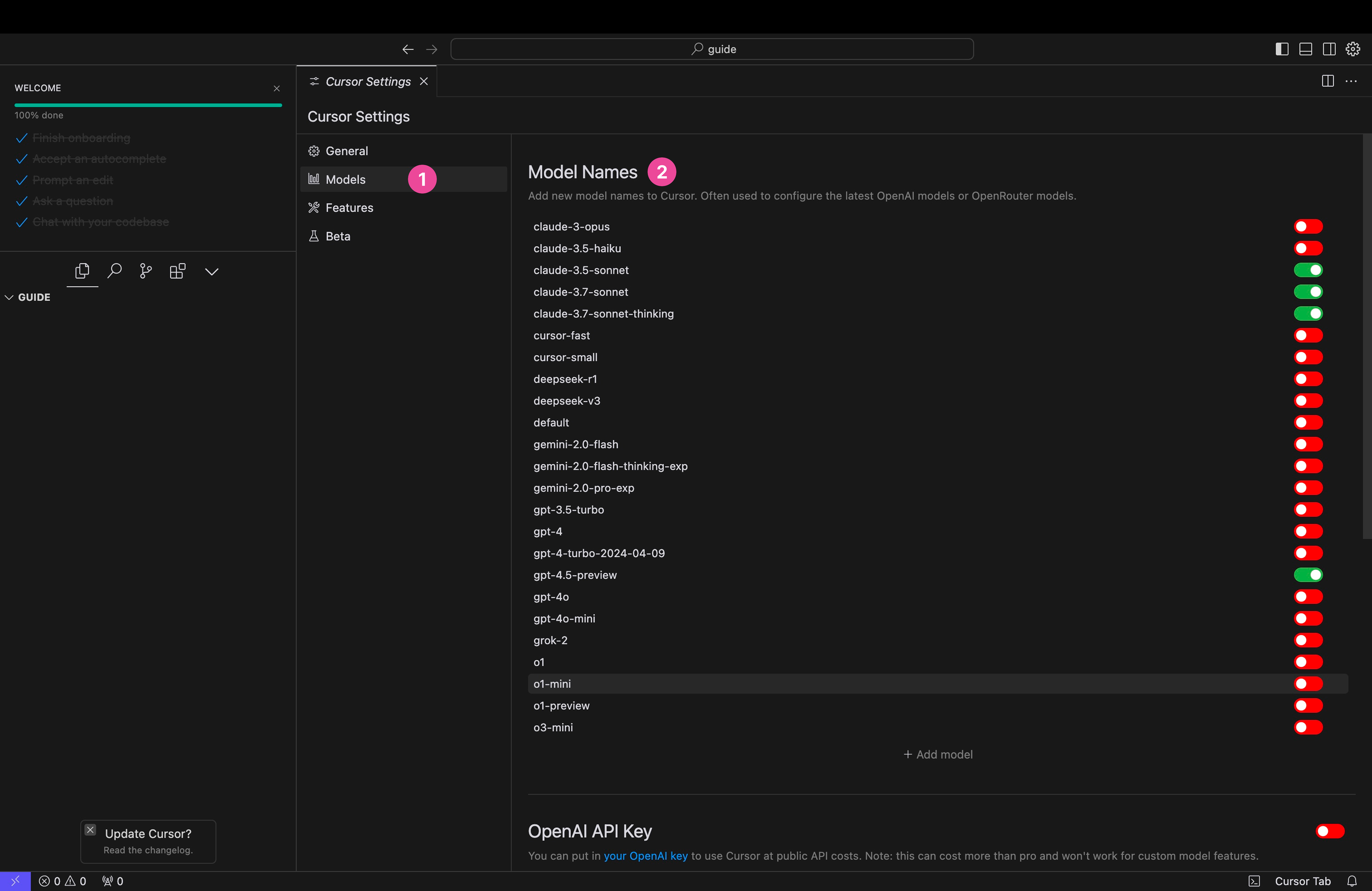

Step 1: Open Cursor Settings

- Open Cursor

- Open Cursor Settings under Cursor > Settings > Cursor Settings

Step 2: Navigate to Models Section

- In the Cursor Settings sidebar, select "Models"

- This will display various model configuration options

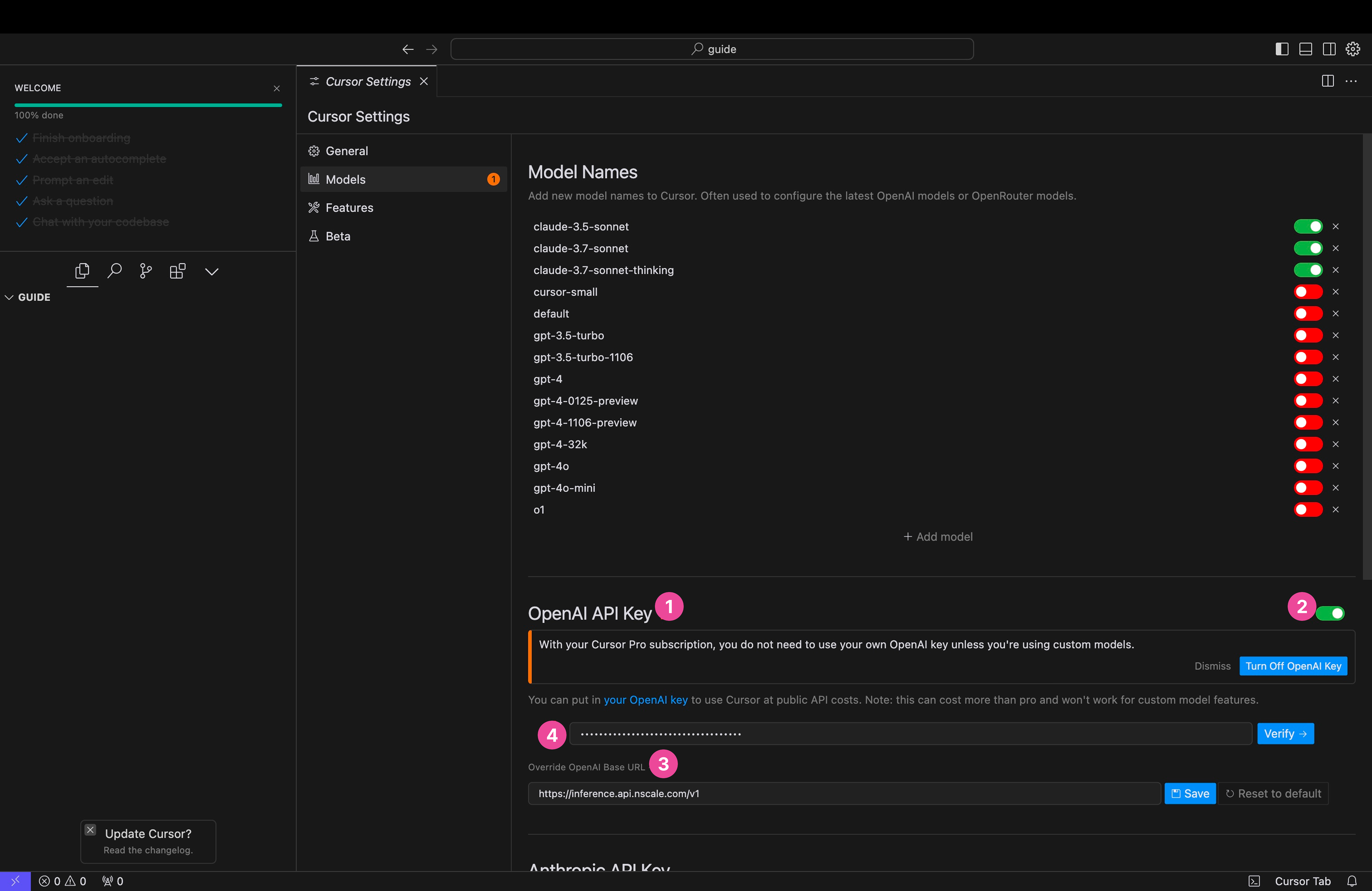

Step 3: Configure Custom Inference Endpoint and API Key

- Scroll down to the "OpenAI API Key" section

- Toggle the switch to enable the OpenAI API Key functionality. When prompted with “Enable OpenAI API Key”, click “Enable”

- Click on “Override OpenAI Base URL” and override it with Nscale’s inference endpoint: https://inference.api.nscale.com/v1

- Input your Nscale API key

- Disable all models that are not provided by Nscale

- Click the Verify button to check your configuration

- From here you can add any model hosted by Nscale by clicking “+ Add model” and entering the identifier of the model you wish to use, for example:

Qwen/Qwen2.5-Coder-32B-Instruct

Troubleshooting: Cursor requires you to disable any model not provided by your custom endpoint, so make sure only Nscale models are enabled.
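If verification fails in Cursor, it can help to check the endpoint and key outside the IDE. The following is a minimal sketch, not an official Nscale client: it uses only the Python standard library and assumes that "OpenAI-compatible" means the endpoint accepts a POST to `/chat/completions` with a Bearer token. The helper names `build_chat_request` and `send` and the `max_tokens` value are illustrative choices, not part of Nscale's or Cursor's tooling.

```python
import json
import urllib.request

# Base URL taken from Step 3 of this guide.
NSCALE_BASE_URL = "https://inference.api.nscale.com/v1"


def build_chat_request(api_key, model, prompt, base_url=NSCALE_BASE_URL):
    """Build (but do not send) an OpenAI-style chat completion request.

    Assumption: the endpoint follows the OpenAI chat completions format.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 32,  # keep the test call cheap
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request, timeout=30):
    """Send the request and return the decoded JSON response."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

To try it, pass your own key and a model identifier, for instance `send(build_chat_request(my_key, "Qwen/Qwen2.5-Coder-32B-Instruct", "Say hello"))`, where `my_key` is a placeholder for your Nscale API key. `urlopen` raises `urllib.error.HTTPError` on a non-2xx response, so a rejected key or unknown model identifier should surface as an exception rather than a silent failure.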

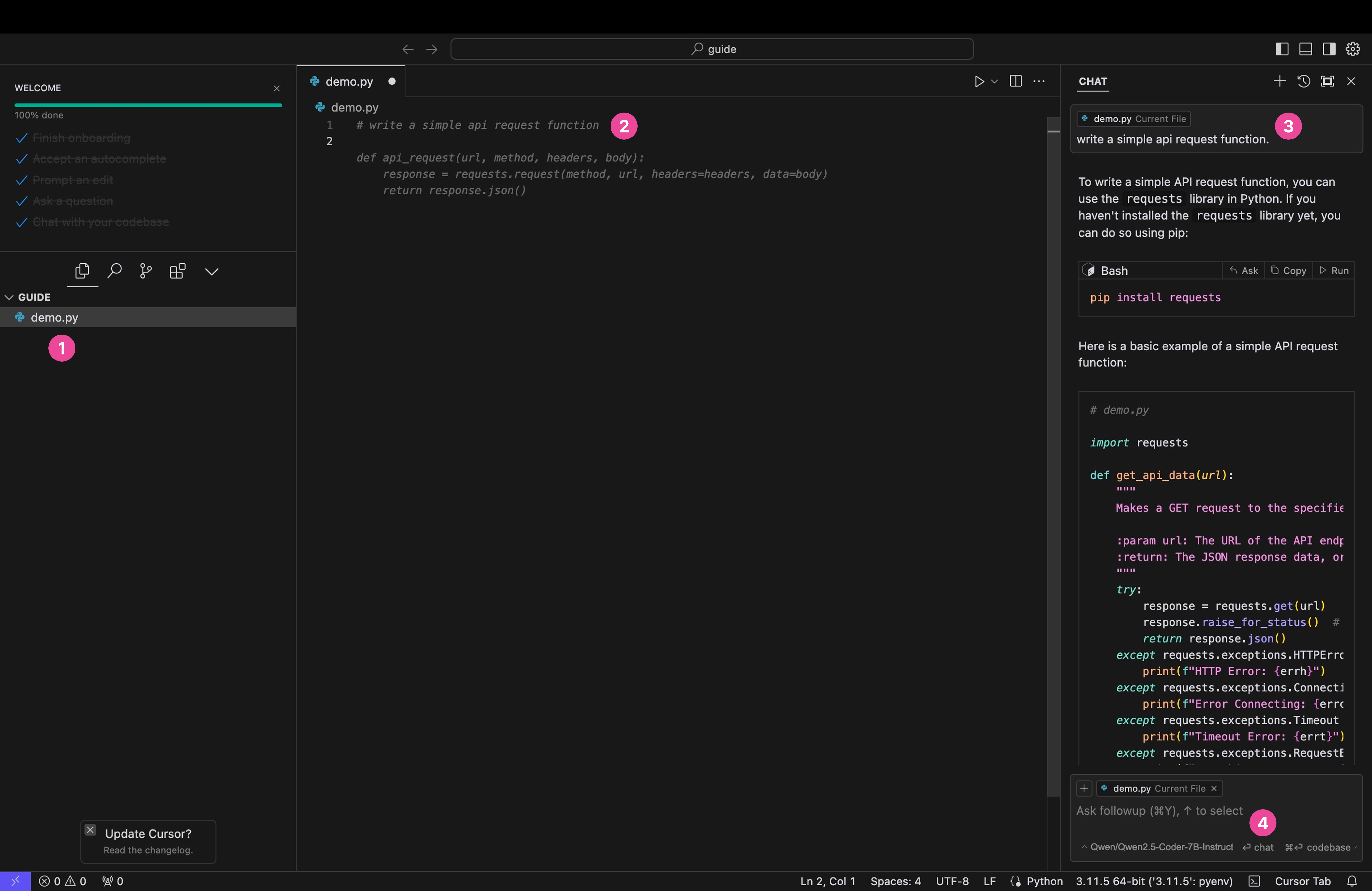

Step 4: Test Your Integration

- Open or create a file

- Test the autocompletion capabilities by writing an instruction in a comment

- Test the chat feature by asking a question about your code

- Make sure you have access to all the models you have selected
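The last check above can also be scripted if you have enabled several models. The sketch below assumes responses follow the OpenAI chat completion shape (`choices[0].message.content`), which is what an OpenAI-compatible API would be expected to return. `extract_reply` and `check_models` are hypothetical helpers, and `ask` stands in for whatever function you use to send a prompt to a given model.

```python
def extract_reply(response):
    """Return the assistant text from an OpenAI-style chat completion dict."""
    choices = response.get("choices") or []
    if not choices:
        raise ValueError("response contains no choices")
    return choices[0]["message"]["content"]


def check_models(models, ask):
    """Call ask(model) for each identifier and record success or the error text.

    `ask` is any callable that takes a model identifier and returns the
    parsed JSON response for a short test prompt.
    """
    results = {}
    for model in models:
        try:
            results[model] = ("ok", extract_reply(ask(model)))
        except Exception as error:  # report every failure instead of stopping
            results[model] = ("failed", str(error))
    return results
```

Passing the list of identifiers you added in Step 3 gives one entry per model, so a model that is enabled in Cursor but not reachable with your key shows up as `"failed"` together with the error message.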

Step 5: Revert to the Original Settings

- Under “OpenAI API Key”, click “Reset to default”

- Disable the OpenAI API Key

- Disable the Nscale-hosted models and re-enable the models of your choice


Conclusion

You should be all set! Your Cursor IDE is now configured to use models hosted on Nscale.

Take advantage of our $5 free credit to level up your AI-driven development workflow today. Sign up now.
